06 May 2020

by Marcus Tomalin and Stefanie Ullmann

In recent years, the automatic detection of online hate speech has become an active research topic in machine learning. This has been prompted by increasing anxieties about the prevalence of hate speech on social media, and the psychological and societal harms that offensive messages can cause. These anxieties have only increased in recent weeks as many countries have been in lockdown due to the Covid-19 pandemic (L1GHT Toxicity during Coronavirus report). António Guterres, the Secretary-General of the United Nations, has explicitly acknowledged that the ongoing crisis has caused a marked increase in hate speech.

Online hate speech presents particular problems, especially in modern liberal democracies. Dealing with it forces us to reflect carefully on the tension between free speech (i.e., allowing people to say what they want) and protective censorship (i.e., safeguarding vulnerable groups from abusive or threatening language). Most social media sites have adopted self-imposed definitions, guidelines, and policies for handling toxic messages, and they employ human content moderators to decide whether particular posts are offensive and should be removed. However, this framework is unsustainable. For a start, offensive posts are only removed retrospectively, after the harm has already been caused. Further, there are far too many instances of hate speech for human moderators to assess them all. In addition, it is problematic that unelected corporations such as Facebook and Twitter should be the gatekeepers of free speech. Who are they to regulate our democracies by deciding what we can and can’t say?

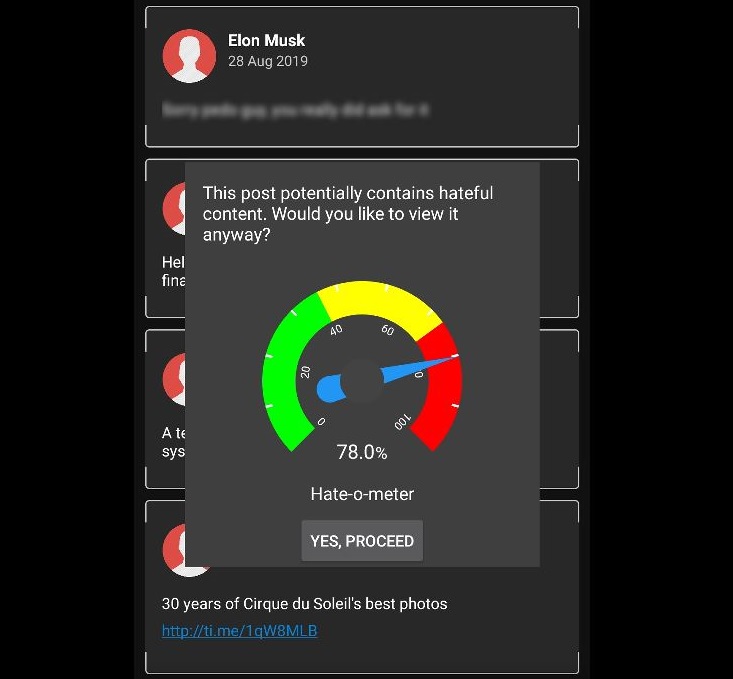

Towards the end of 2019, two Cambridge-based researchers, Dr Marcus Tomalin and Dr Stefanie Ullmann, proposed a different approach. Their framework demonstrated how an automated hate speech detection system could be used to identify a message as offensive before it was posted. The message would then be temporarily quarantined, and the intended recipient would receive a warning indicating the degree to which the quarantined message may be offensive. The recipient could then choose either to read the message or to prevent it from appearing. This approach strikes an appropriate balance between libertarian and authoritarian tendencies: it allows people to write whatever they want, but recipients are also free to read only those messages they wish to read. Crucially, this framework removes the need for corporations or national governments to decide which messages are acceptable and which are not. As Dr Ullmann puts it, “quarantining redirects control back into the hands of the user. No one should be at the mercy of someone else’s senseless hate and abuse, and quarantining protects users whilst managing the balancing act of ensuring free speech and avoiding censorship.”
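To make the quarantining workflow more concrete, here is a minimal Python sketch of the decision logic described above. It makes several assumptions, none of which describe the researchers' actual system:

- `toy_score` is a hypothetical stand-in for a trained hate speech classifier. It simply counts words from a tiny blocklist.
- The threshold of 0.5 is an arbitrary illustrative value.
- The names `QuarantinedMessage`, `quarantine`, `preview`, and `reveal` are invented for this example.

The score plays the role of the "degree to which the quarantined message may be offensive" shown in the recipient's warning.

```python
from dataclasses import dataclass
from typing import Callable, Optional


def toy_score(text: str) -> float:
    """Hypothetical stand-in for a trained classifier (NOT the real model).

    Returns a value in [0, 1]: 0.5 per blocklisted word, capped at 1.0.
    """
    blocklist = {"idiot", "scum", "vermin"}  # illustrative only
    words = [w.strip(".,!?").lower() for w in text.split()]
    hits = sum(w in blocklist for w in words)
    return min(1.0, 0.5 * hits)


@dataclass
class QuarantinedMessage:
    body: str          # the sender's original, unaltered text
    score: float       # estimated degree of offensiveness in [0, 1]
    quarantined: bool  # True if the message is held back pending consent

    def preview(self) -> str:
        """What the recipient sees first: the message, or a warning."""
        if not self.quarantined:
            return self.body
        return (f"[Quarantined: estimated {self.score:.0%} likely to be "
                f"offensive. Choose to read or discard.]")

    def reveal(self, recipient_consents: bool) -> Optional[str]:
        """Return the body only if it was never quarantined or the recipient opts in."""
        if not self.quarantined or recipient_consents:
            return self.body
        return None


def quarantine(body: str,
               score_fn: Callable[[str], float] = toy_score,
               threshold: float = 0.5) -> QuarantinedMessage:
    """Score a message before delivery and quarantine it if the score meets the threshold."""
    score = score_fn(body)
    return QuarantinedMessage(body=body, score=score, quarantined=score >= threshold)


if __name__ == "__main__":
    msg = quarantine("You are vermin and scum")
    print(msg.preview())
    print(msg.reveal(recipient_consents=False))
    print(msg.reveal(recipient_consents=True))
```

The design mirrors the framework's key point. The sender's text is never altered or deleted. Only its initial presentation to the recipient changes, and the final decision to read it rests with the recipient (`reveal`) rather than with a platform or a government.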

Nicholas Foong, a fourth-year student in the Department of Engineering supervised by Dr Tomalin, has now developed both a state-of-the-art automatic hate speech detection system and an app that demonstrates how the system can be used to quarantine offensive messages. The app blurs them until the recipient actively chooses to read them. An Android version of the app is available, along with a short demo video of the app in action.

The system correctly identifies up to 91% of offensive posts. In the app, it is used to detect and quarantine hateful posts automatically, in real time, within a simulated social media feed. The app demonstrates that the trained system can run locally on a mobile phone, taking up just 9 MB of storage and requiring no internet connection.

Nicholas Foong, app developer, Department of Engineering, Cambridge

Despite these promising developments, much work remains to be done if the problem of online hate speech is to be solved convincingly. The detection systems themselves need to cope with different linguistic registers and styles (e.g., irony, satire), and the training data must be annotated accurately to avoid introducing unwanted biases. In addition, since hate speech increasingly combines words and images, the next generation of automated detection systems will need to handle multimodal input. Nonetheless, the quarantining framework offers an effective, practical way of incorporating such technologies into our everyday online interactions. And, as we adjust to life in lockdown, we can perhaps appreciate more than ever how quarantining can help to keep us all safe.