A report of the event as well as videos of the talks can be found here.

The workshop will consider the social impact of Artificially Intelligent Communications Technology (AICT). Specifically, the talks and discussions will focus on different aspects of the complex relationships between language, gender, and technology. These issues are of particular relevance in an age when Virtual Personal Assistants such as Siri, Cortana, and Alexa present themselves as submissive females, when most language-based technologies manifest glaring gender biases, when 78% of the experts developing AI systems are male, when sexist hate speech online is a widely recognised problem and when many Western cultures and societies are increasingly recognising the significance of non-binary gender identities.

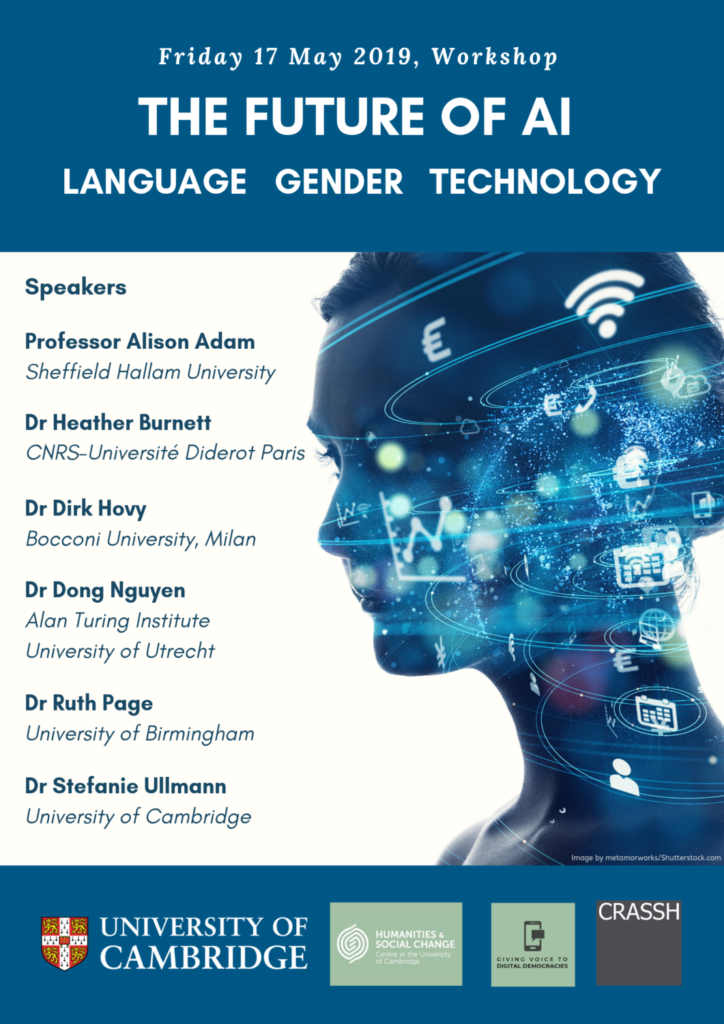

Speakers

Professor Alison Adam, Sheffield Hallam University

Dr Heather Burnett, CNRS-Université Paris Diderot

Dr Dirk Hovy, Bocconi University

Dr Dong Nguyen, Alan Turing Institute, University of Utrecht

Dr Ruth Page, University of Birmingham

Dr Stefanie Ullmann, University of Cambridge

The workshop is organised by Giving Voice to Digital Democracies: The Social Impact of Artificially Intelligent Communications Technology, a research project which is part of the Centre for the Humanities and Social Change, Cambridge and funded by the Humanities and Social Change International Foundation.

Giving Voice to Digital Democracies explores the social impact of Artificially Intelligent Communications Technology – that is, AI systems that use speech recognition, speech synthesis, dialogue modelling, machine translation, natural language processing, and/or smart telecommunications as interfaces. Due to recent advances in machine learning, these technologies are already rapidly transforming our modern digital democracies. While they can certainly have a positive impact on society (e.g. by promoting free speech and political engagement), they also offer opportunities for distortion and deception. Unbalanced data sets can reinforce problematic social biases; automated Twitter bots can drastically increase the spread of malinformation and hate speech online; and the responses of automated Virtual Personal Assistants during conversations about sensitive topics (e.g. suicidal tendencies, religion, sexual identity) can have serious consequences.

Responding to these increasingly urgent concerns, this project brings together experts from linguistics, philosophy, speech technology, computer science, psychology, sociology and political theory to develop design objectives for the creation of AICT systems that are more ethical, trustworthy and transparent. These technologies will have the potential to more positively affect the kinds of social change that will shape modern digital democracies in the immediate future.

Please register for the workshop here.

Queries: Una Yeung (uy202@cam.ac.uk)

Image by metamorworks/Shutterstock.com