Inaugural workshop

25 March 2019

Centre for research in the arts, social sciences and humanities (crassh), cambridge

The Giving Voice to Digital Democracies project hosted its inaugural workshop on 25th March 2019 at the Centre for Research in the Arts, Social Sciences and Humanities (CRASSH). The project is part of the Centre for the Humanities and Social Change, Cambridge, funded by the Humanities and Social Change International Foundation.

This significantly oversubscribed event brought together experts from academia, government, and industry, enabling a diverse conversation and discussion with an engaged audience. The one-day workshop was opened by Project Manager Dr Marcus Tomalin who summarised the main purposes of the project and workshop.

The focus was specifically on the ethical implications of Artificially Intelligent Communications Technology (AICT). While discussions about ethics often revolve around issues such as data protection and privacy, transparency and accountability (all of which are important concerns), the impact that language-based AI systems have upon our daily lives is a topic that has previously received comparatively little (academic) attention. Some of the central issues that merit careful consideration are:

- What might more ethical AICT systems look like?

- How could users be better protected against hate speech on social media?

- (How) can we get rid of data bias inherent in AICT systems?

These and other questions were not only key on the agenda for the workshop, but will continue to be central research objectives for the project over the next 3½ years.

Olly Grender (House of Lords Select Committee on Artificial Intelligence) was the first main speaker, and she argued that we need to put ethics at the centre of AI development. This is something to which the UK is particularly well-placed to contribute. She emphasised the need to equip people with a sufficiently deep understanding not only of AI, but also of the fundamentals of ethics. This will help to ensure that the prejudices of the past are not built into automated systems. She also emphasised the extent to which the government is focusing on these. The creation of the Centre for Data Ethics and Innovation is a conspicuous recent development, and numerous white papers about such matters have been, and will be, published. The forthcoming white paper concerning ‘online harm’ will be especially influential, and the Giving Voice to Digital Democracies project has been involved in preparing that paper.

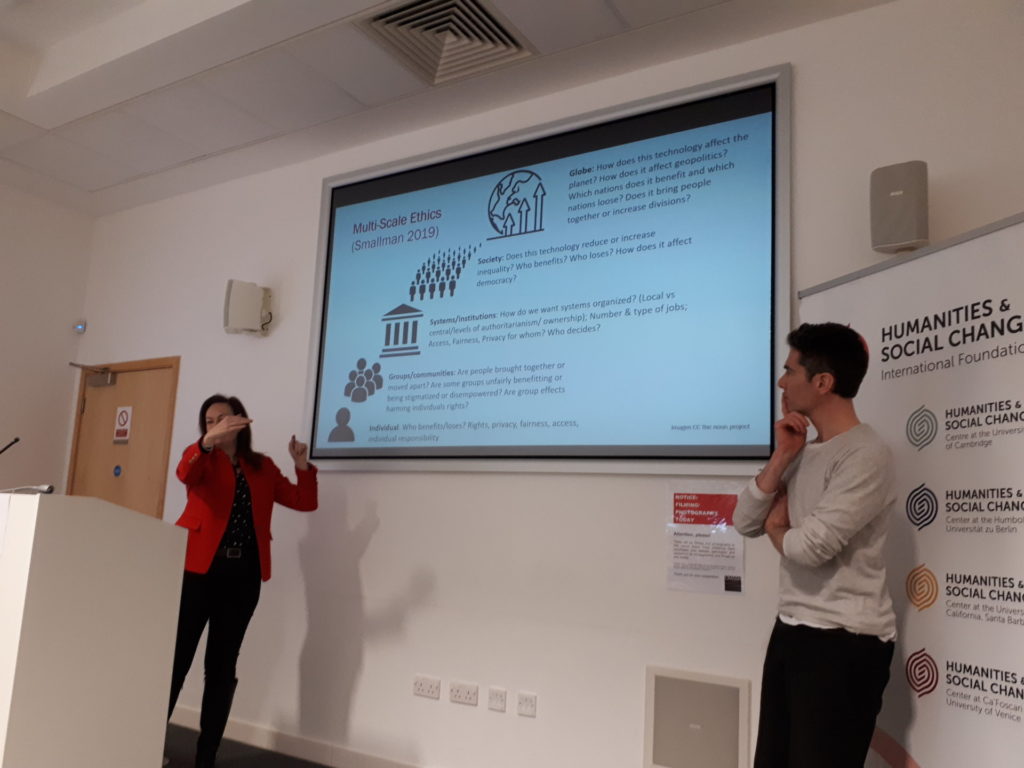

In her talk, Dr Melanie Smallman (University College London, Alan Turing Institute) proposed a multi-scale ethical framework to combat social inequality caused and magnified by technology. In essence, she suggested that the ethical contexts at different levels of the hierarchy, from individual members of society to vast corporations, can differ greatly. Something that seems ethical justifiable at one level may not be at another level. These different scales need to be factored into the process of developing language-based AI systems. As Smallman reminded us, “we need to make sure that technology does something good”.

Dr Adrian Weller (University of Cambridge, Alan Turing Institute, The Centre for Data Ethics and Innovation) gave an overview of various ethical issues that arise in relation to cutting-edge AI systems. He emphasised that we must take measures to ensure that we can trust the AI systems we create. He argued that we need to make sure people have a better understanding of when AI systems are likely to perform well, and when they are likely to go awry. While such systems are extremely powerful and effective in many respects, they can also be alarmingly brittle, and can make mistakes (e.g., classificatory errors) of a kind that no human would make.

In his talk, Dr Marcus Tomalin (University of Cambridge) stressed that traditional thinking about ethics is inadequate for discussions of AICT systems. A more appropriate ethical framework would be ontocentric rather than predominantly anthropocentric, and patient-oriented rather than merely agent-oriented. He also argued that algorithmic decision-making can be hard to analyse in relation to AICT systems. For instance, it is not at all simple to determine where and when a machine translation system makes the decision to translate a specific word in a specific way. Yet such ‘decisions’ can have serious ethical consequences.

Professor Emily M. Bender (University of Washington) presented a typology of ethical risks in language technology and asked the question: ‘how can we make the processes underlying NLP technologies more transparent?’ Her work centres on the foregrounding of characteristics of data sets in so-called ‘data statements’, providing information (e.g., nature of the data, whose language, speech situation, etc.) about data at all times. The underlying conviction that such statements would help system designers to appreciate in advance the impact that a specific data set may have on the system being constructed (e.g., whether or not it would reinforce an existing bias).

Dr Margaret Mitchell (Google Research and Machine Intelligence) also discussed the problem of data bias. She showed that such biases are manifold and that they interact with machine learning processes at various stages and levels. This is sometimes referred to as ‘bias network effect’ or ‘bias laundering’. Adopting an approach that was similar in spirit to the aforementioned ‘data statements’, she proposed the implementation of ‘model cards’ at the processing level.

The workshop ended with a roundtable discussion involving the various speakers, with many of the questions coming from the audience. This provided an opportunity to consider some of the core ideas in greater detail and to compare and contrast some of the ideas and approaches that had been presented earlier in the day.

The considerable interest that this inaugural workshop generated confirms once again the great need for genuinely interdisciplinary events of this kind, which bring together researchers and experts from technology, the humanities, and politics to reflect upon the social impact of the current generation of AI systems – and especially those systems that interact with us using language.

Olly Grender (left), Marcus Tomalin (middle), Stefanie Ullmann (right)

Marcus Tomalin

Adrian Weller

Melanie Smallman (left), Marcus Tomalin (right)

Workshop audience

Roundtable discussion (from left to right): Marcus Tomalin, Olly Grender, Margaret Mitchell, Melanie Smallman, Emily M. Bender

Text by Stefanie Ullmann and Marcus Tomalin

Pictures taken by Imke van Heerden and Stefanie Ullmann

Videos filmed and edited by Glenn Jobson